Fastai Lesson 1

Introduction to Deep Learning for Coders

Disclaimer:

In the unlikely event that the person reading this is not me, please make sure to check out the actual course “Deep Learning for Coders” over at course.fast.ai. These summaries are written with an audience of one - me - in mind, so proceed with caution.

What is this course?

I find myself at the onset of a learning journey which many other students before me have braved. The fast.ai Deep Learning for Coders course has become a staple in the machine learning educational universe and follows an approach to learning and practicing data science that resonates well with me.

In line with the recurring advice of the co-founders, Rachel Thomas and Jeremy Howard, to start blogging as a means of ingraining knowledge and interacting with the international community, I have started this blog - more for myself than for any wandering reader who might stumble upon this site.

The course is based on a few guiding principles inspired by an article by Ruth Walker (Walker, 2009) and essentially follows a top-down approach from usage to in-depth understanding.

This philosophy is one of the main drivers prompting me to take on the considerable time investment of following the course in the recommended, highly practice-focused manner.

This first chapter of the course offers a comfortingly mild introduction to the subject of deep learning as a whole as well as the underlying philosophy of the course and the python library. Let’s start with the deep learning part:

What is deep learning?

Before delving into the murky waters of deep learning, Jeremy provides the equivalent of a friendly “Don’t Panic” lettering (Adams, 1995) in the form of two statements:

- To become an effective deep learning practitioner, you do not need lots of math, lots of data and lots of prohibitively expensive computers.

- What you do need, however, is a GPU. And it should be on Linux, it should be NVIDIA and it should be virtual.

The reasoning behind the latter statement is twofold: non-CUDA GPUs cause compatibility issues, and a virtual machine keeps you from getting side-tracked by system administration instead of focusing on building models.

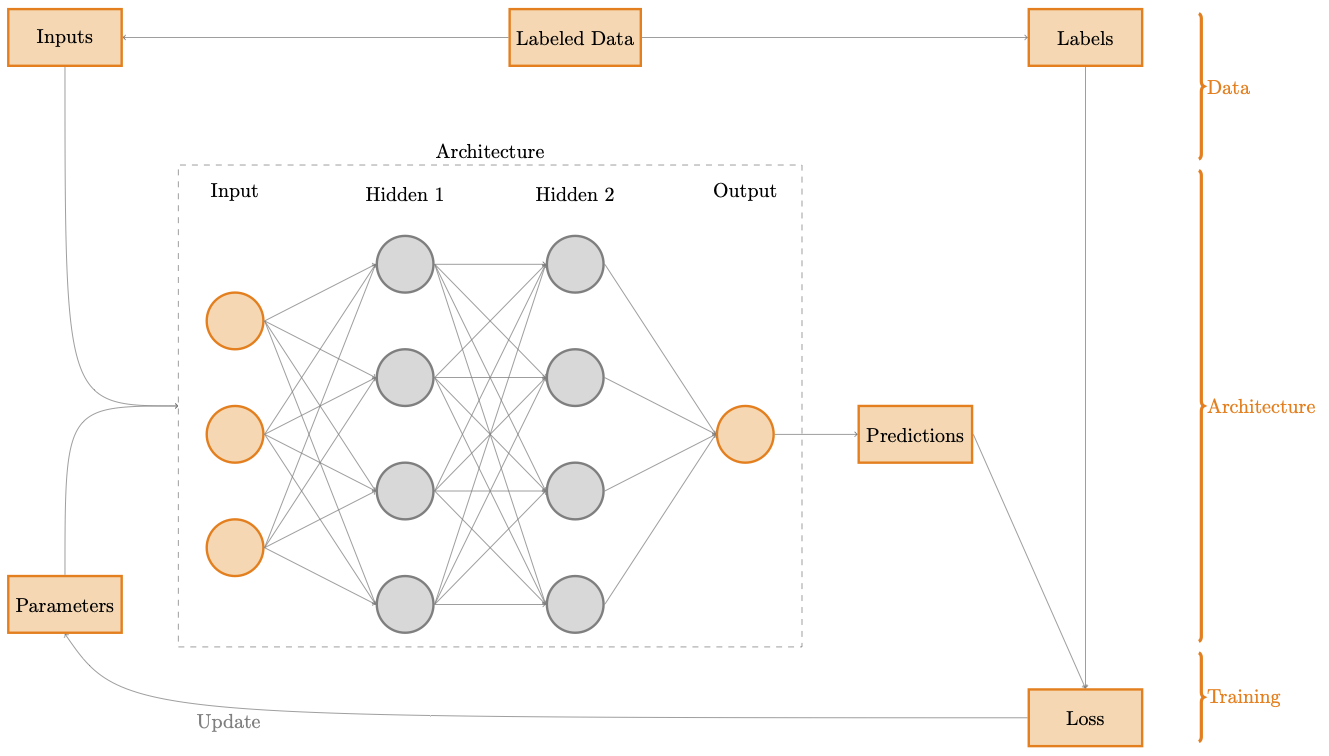

The deep learning process and its established vocabulary is presented in the below graphic:

The core of a model, its functional form, is its architecture. Its set of weights are called parameters. The output of the model is the set of predictions. These are calculated from the inputs of the model, namely the data, not including the labels. The labels, i.e. the dependent variables, are used in conjunction with the predictions to calculate the loss. This is the measure of performance that is used to update the parameters - the actual learning process.

The actual model is then simply the architecture together with the set of parameters obtained through the learning process.
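To make the vocabulary a bit more tangible for myself, here is the loop from the graphic in plain Python. This is only a minimal sketch under simple assumptions, not how fastai implements training: the architecture is a made-up two-parameter straight line, the loss is mean squared error, and the data, learning rate and number of steps are invented for the example.

```python
# Toy version of the training loop: architecture + parameters -> predictions,
# predictions + labels -> loss, gradient of the loss -> parameter update.

def architecture(x, params):
    """Functional form of the model: a straight line w * x + b."""
    w, b = params
    return w * x + b

def mse_loss(predictions, labels):
    """Mean squared error between predictions and labels."""
    return sum((p - y) ** 2 for p, y in zip(predictions, labels)) / len(labels)

def train(inputs, labels, params, lr=0.05, steps=2000):
    """Plain gradient descent on the two parameters."""
    w, b = params
    n = len(inputs)
    for _ in range(steps):
        preds = [architecture(x, (w, b)) for x in inputs]
        # Analytic gradients of the MSE loss with respect to w and b.
        grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, labels, inputs)) / n
        grad_b = sum(2 * (p - y) for p, y in zip(preds, labels)) / n
        # The learning step: the parameters are updated, the labels stay fixed.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Made-up data generated from y = 2x + 1.
inputs = [0.0, 1.0, 2.0, 3.0]
labels = [1.0, 3.0, 5.0, 7.0]

initial = (0.0, 0.0)
trained = train(inputs, labels, initial)

loss_before = mse_loss([architecture(x, initial) for x in inputs], labels)
loss_after = mse_loss([architecture(x, trained) for x in inputs], labels)
print(f"loss before: {loss_before:.4f}, after: {loss_after:.6f}")
print(f"trained parameters: w={trained[0]:.3f}, b={trained[1]:.3f}")
```

The trained model in the sense used above is then just `architecture` together with the values in `trained`.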

How to build a model?

The layered architecture of the fastai library (Howard & Gugger, 2020) harmonizes beautifully with the teaching approach of the course. Using the top level of the architecture provides a very helpful latticework to think through the steps involved in training a model:

The below code performs all three key parts of the deep learning process introduced above:

- A labeled dataset of cat and dog breeds called the Oxford-IIIT Pet Dataset is downloaded and saved to a folder on disk

- A model that has been pre-trained on 1.3 million images is downloaded from the internet

- The model is fine-tuned to the binary classification task of recognizing cats and dogs

All this happens in six lines of python code:

from fastai.vision.all import *

path = untar_data(URLs.PETS)/'images'

def is_cat(x):
    return x[0].isupper()

dls = ImageDataLoaders.from_name_func(
    path, get_image_files(path), valid_pct=0.2, seed=42,
    label_func=is_cat, item_tfms=Resize(224))

learn = cnn_learner(dls, resnet34, metrics=error_rate)
learn.fine_tune(1)

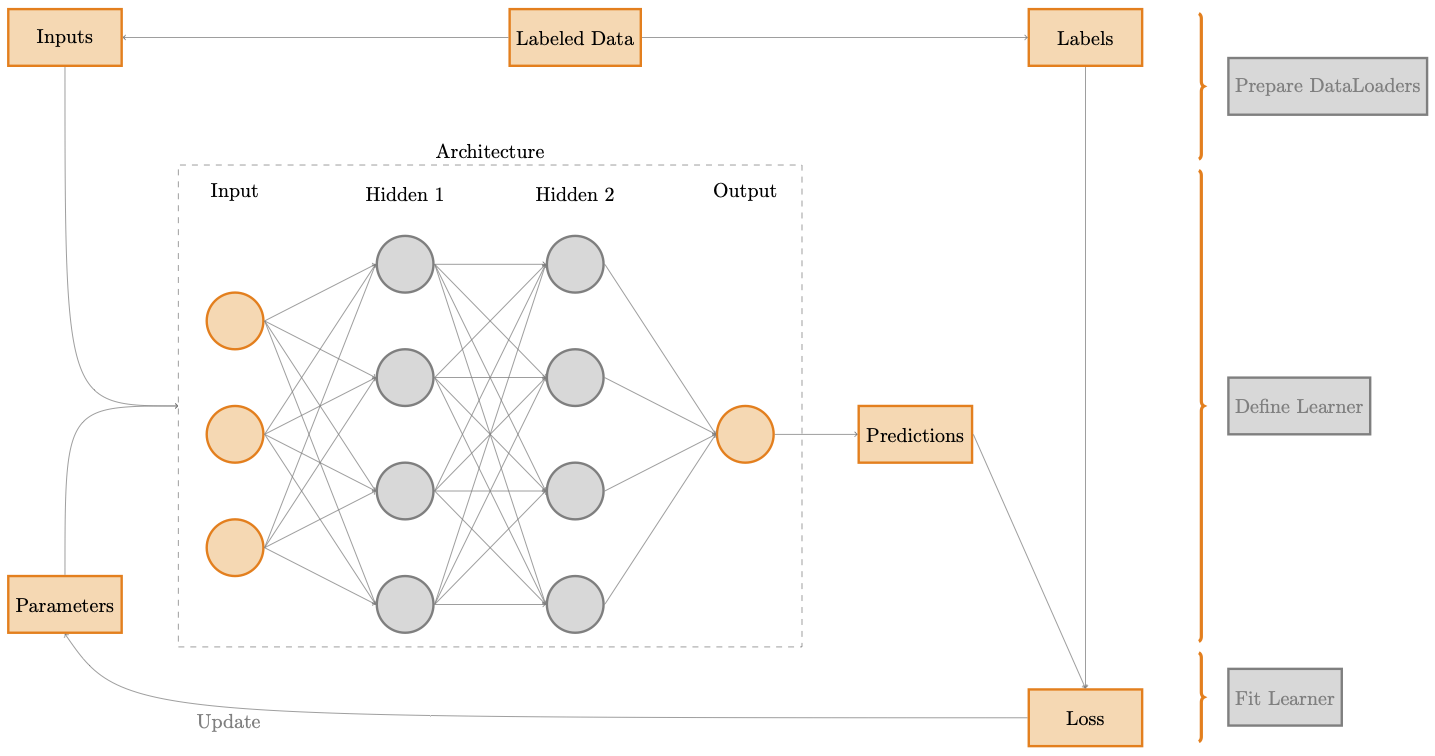

Before examining each line individually, it helps to compare them to the first graphic in the interest of “playing the entire game” (Walker, 2009):

Lets go through each line in detail:

from fastai.vision.all import *

The fastai library is organized by task and is optimized in order to allow the somewhat unconventional wildcard importing style. Accordingly, the first line imports all of the main functions required for training computer vision models.

path = untar_data(URLs.PETS)/'images'

The function untar_data fetches a dataset in the form of a tar archive, extracts the individual files and returns the file path under which they were saved. In this case, it is a predefined dataset of pet images from https://s3.amazonaws.com/fast-ai-imageclas/oxford-iiit-pet.tgz. Because the return value is a path object, the / operator can be applied directly to the function call to append the images folder name.
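The path syntax is easier to see in isolation. The sketch below uses only the standard library; the directory is a hypothetical stand-in for whatever untar_data returns on a given machine, and PurePosixPath is used so that the result looks the same on every operating system.

```python
from pathlib import PurePosixPath

# Hypothetical location standing in for the value returned by untar_data;
# the real directory depends on the local fastai configuration.
dataset_root = PurePosixPath("/home/user/.fastai/data/oxford-iiit-pet")

# The / operator on a path object joins path components.
images_dir = dataset_root / "images"
print(images_dir)
```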

def is_cat(x):
    return x[0].isupper()

dls = ImageDataLoaders.from_name_func(
    path, get_image_files(path), valid_pct=0.2, seed=42,
    label_func=is_cat, item_tfms=Resize(224))

Data preparation in fastai generally takes the form of the DataLoaders class. In this case the vision-specific wrapper ImageDataLoaders is used to prepare the datasets.

The method from_name_func assigns the label for each image based on a function that is applied to the file name. In this case is_cat is the labeling function: it takes the file name as input and returns True whenever the first character of the file name is upper case. In this dataset, the file names of cat breeds start with an upper-case letter, while those of dog breeds start with a lower-case letter.
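A quick way to convince myself of what the labeling function does is to run it on a handful of names. The file names below are hypothetical examples in the breed_number.jpg style, not a listing of the actual dataset.

```python
def is_cat(filename):
    """Return True when the file name starts with an upper-case letter."""
    return filename[0].isupper()

# Hypothetical file names following the breed_number.jpg pattern.
example_files = ["Bengal_12.jpg", "beagle_7.jpg", "Persian_3.jpg", "pug_101.jpg"]

labels = {name: is_cat(name) for name in example_files}
for name, label in labels.items():
    print(f"{name:15s} -> is_cat={label}")
```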

The most important parameter to mention here is valid_pct=0.2. This tells fastai to hold out 20% of the data and not use it for training at all. This is called the validation set, while the remaining 80% of data are the training set (20% is the default value in fastai).

The validation set is used to measure the performance of the model, so as to base the evaluation on unseen data. By default the 20% held out for validation are selected randomly (the seed=42 argument leads to the same selection every time the code is run).
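The effect of valid_pct and seed can be imitated with the standard library. This is a rough sketch of the idea of a seeded random hold-out, not fastai's own splitting code; the helper random_split and the file names are made up for illustration.

```python
import random

def random_split(items, valid_pct=0.2, seed=None):
    """Shuffle item indices and hold out the first valid_pct share for validation."""
    rng = random.Random(seed)
    indices = list(range(len(items)))
    rng.shuffle(indices)
    cut = int(valid_pct * len(items))
    valid = [items[i] for i in indices[:cut]]
    train = [items[i] for i in indices[cut:]]
    return train, valid

# Hypothetical file names standing in for the real image files.
files = [f"img_{i}.jpg" for i in range(100)]

train_a, valid_a = random_split(files, valid_pct=0.2, seed=42)
train_b, valid_b = random_split(files, valid_pct=0.2, seed=42)

print(len(train_a), len(valid_a))
print("same validation set on both runs:", valid_a == valid_b)
```

Fixing the seed matters when comparing models: if the validation set changed between runs, a difference in the metric could come from the split rather than from the model.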

The first two steps only need to be run once. If you run the cell again, it will use the dataset and model that have already been downloaded, rather than downloading them again.

learn = cnn_learner(dls, resnet34, metrics=error_rate)

The fifth line of the code tells fastai to create a convolutional neural network (CNN) and specifies what architecture to use (i.e. what kind of model to create), what data we want to train it on, and what metric to use.

A metric is a function that measures the quality of the model’s predictions using the validation set, and will be printed at the end of each epoch. For additional details, see the digression later in this text.

There are some standard architectures that work most of the time, and this example uses one called ResNet. It is both fast and accurate for many datasets and problems. The 34 in resnet34 refers to the number of layers in this variant of the architecture (other options are 18, 50, 101, and 152).

Models using architectures with more layers take longer to train and are more prone to overfitting. Given more data, however, they can be significantly more accurate.

Recall that the example is based on a pre-trained model. The pretrained parameter of cnn_learner defaults to True. This sets the parameters in the model to values that have already been trained by experts to recognize a thousand different categories across 1.3 million photos (using the famous ImageNet dataset).

You should nearly always use a pre-trained model, because it means that your model, before you have shown it any data, is already very capable. The visualizations in (Zeiler & Fergus, 2014) show how basic the patterns detected by the early layers of a CNN are, which explains why pre-trained models are a helpful basis for fine-tuning to different tasks.

When using a pre-trained model, cnn_learner will remove the last layer (the head), since that is always specifically customized to the original training task (i.e. ImageNet dataset classification) and replace it with one or more new layers with randomized weights of an appropriate size for the dataset you are working with.

Using pretrained models is the most important method we have for training more accurate models more quickly, with less data, time and money.

learn.fine_tune(1)

The sixth line of our code tells fastai how to fit the model.

In order to fit a model, we have to provide at least one piece of information: how many times to look at each image (known as the number of epochs).

The number of epochs you select will largely depend on how much time you have available, and how long you find it takes in practice to fit your model.

Adapting a pre-trained model to a new dataset involves a few tricks, and when you use the fine_tune method, fastai applies them for you. There are a few parameters you can set (which we’ll discuss later), but in the default form shown here, it does two steps:

- Use one epoch to fit just those parts of the model necessary to get the new random head to work correctly with your dataset.

- Use the number of epochs requested when calling the method to fit the entire model, updating the weights of the later layers (especially the head) faster than the earlier layers (which, as we’ll see, generally don’t require many changes from the pretrained weights).
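The two steps can be written down as a small plan. The helper fine_tune_plan below is hypothetical and only describes the schedule as data; the three layer groups, the base learning rate and the factor of ten between groups are invented for illustration and are not fastai's actual values.

```python
def fine_tune_plan(epochs, freeze_epochs=1, base_lr=2e-3, n_groups=3):
    """Describe the two phases as a list of per-epoch settings.

    Hypothetical helper: each entry names the phase, which layer groups are
    trainable, and an illustrative learning rate per group (last group = head).
    """
    plan = []
    # Phase 1: only the last group (the new random head) is trained.
    for _ in range(freeze_epochs):
        trainable = [False] * (n_groups - 1) + [True]
        lrs = [0.0] * (n_groups - 1) + [base_lr]
        plan.append({"phase": "frozen", "trainable": trainable, "lrs": lrs})
    # Phase 2: every group is trained, earlier groups with smaller rates.
    for _ in range(epochs):
        lrs = [base_lr / 10 ** (n_groups - 1 - g) for g in range(n_groups)]
        plan.append({"phase": "unfrozen", "trainable": [True] * n_groups, "lrs": lrs})
    return plan

plan = fine_tune_plan(epochs=1)
for step in plan:
    print(step)
```

With epochs=1, as in the example, the plan has two entries: one frozen epoch for the head and one epoch over the whole model.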

Digression: metrics, loss and overfitting

Loss is not the same as error

For someone new to deep learning, it might be easy to confuse the concepts of loss and error, but there is an important distinction:

- A metric works on an epoch level and is designed for human consumption, i.e. it summarizes the performance of the model after each epoch based on the validation set. In the example, error_rate is used as the metric. This is simply the percentage of incorrectly predicted categories. Another common metric is accuracy, which is simply 1 - error_rate.

- A loss function needs to be much more fine-grained, since its purpose is to define a measure of performance that the training system can use to update its parameters automatically and, accordingly, needs to change with the slightest change in parameters. A good choice for loss is a choice which is easy for stochastic gradient descent to use.
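The difference shows up nicely on a tiny example. In the sketch below the predicted probabilities are made up, and binary cross-entropy is used as one common choice of loss, which is my assumption and not necessarily the loss fastai picks here. A small nudge to the predictions leaves the error rate untouched, while the loss moves.

```python
import math

def error_rate(probs_cat, labels, threshold=0.5):
    """Fraction of examples whose thresholded prediction is wrong."""
    wrong = sum((p > threshold) != y for p, y in zip(probs_cat, labels))
    return wrong / len(labels)

def cross_entropy(probs_cat, labels):
    """Mean binary cross-entropy; reacts to any change in the probabilities."""
    total = 0.0
    for p, y in zip(probs_cat, labels):
        total += -math.log(p if y else 1.0 - p)
    return total / len(labels)

labels = [True, True, False, False]

# Made-up predicted cat probabilities before and after a tiny parameter change.
before = [0.60, 0.40, 0.30, 0.55]
after = [0.62, 0.42, 0.28, 0.53]

print("error rate:", error_rate(before, labels), "->", error_rate(after, labels))
print("loss:", cross_entropy(before, labels), "->", cross_entropy(after, labels))
```

The metric only changes when a prediction crosses the threshold, so it gives gradient descent nothing to follow between those jumps; the loss gives a signal for every small step.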

The metric is used on the validation set in order to measure overfitting. The longer you train for, the better your accuracy will get on the training set; the validation set accuracy will also improve for a while, but eventually it will start getting worse as the model starts to memorize the training set, rather than finding generalizable underlying patterns in the data. When this happens, we say that the model is overfitting.
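In practice this means watching the validation metric per epoch and noting where it peaks. The numbers below are invented to have the typical shape of an overfitting run and are not results from the pet model; best_epoch is a hypothetical helper.

```python
def best_epoch(valid_accuracies):
    """Index of the epoch with the highest validation accuracy."""
    return max(range(len(valid_accuracies)), key=lambda i: valid_accuracies[i])

# Invented numbers shaped like a typical overfitting curve, not real results.
train_acc = [0.78, 0.90, 0.95, 0.98, 0.99, 1.00]
valid_acc = [0.80, 0.88, 0.92, 0.93, 0.91, 0.89]

epoch = best_epoch(valid_acc)
# Validation accuracy drops after its peak while training accuracy keeps rising.
overfitting_from = epoch + 1 if epoch + 1 < len(valid_acc) else None
print(f"best validation accuracy at epoch {epoch}")
print(f"overfitting suspected from epoch {overfitting_from}")
```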

Overfitting is the single most important and challenging issue for all machine learning practitioners and algorithms! When you train a model, you must always have both a training set and a validation set, and must measure the accuracy of your model only on the validation set. If you train for too long, with not enough data, you will see the accuracy of your model start to get worse; this is called overfitting (Howard & Gugger, 2020).

In order to be even more rigorous, you could set aside a third subset of data, the test set, which is used neither for fitting nor for metrics but only when the entire project is complete. This is done in Kaggle competitions, for example.

So now that we have a trained model, fastai allows inference using the predict method of the learner. The predict method returns three values:

- The prediction

- The prediction’s index in the vocabulary

- The probabilities for all categories in the vocabulary

The below code shows an example inference based on an image uploaded in a Jupyter Notebook:

img = PILImage.create(uploader.data[0])

is_cat,_,probs = learn.predict(img)

print(f"Is this a cat?: {is_cat}.")

print(f"Probability it's a cat: {probs[1].item():.6f}")
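How the three return values relate to each other can be mimicked without a model. The sketch below assumes a vocabulary of [False, True] for the is_cat task, which would explain why index 1 is read as the cat probability above; the probabilities are made up and decode_prediction is a hypothetical helper, not part of fastai.

```python
def decode_prediction(probs, vocab):
    """Return (label, index, probs) for a list of class probabilities."""
    index = max(range(len(probs)), key=lambda i: probs[i])
    return vocab[index], index, probs

# Assumed vocabulary for the is_cat task: index 0 is False, index 1 is True.
vocab = [False, True]
# Made-up probabilities for a single image.
probs = [0.03, 0.97]

label, index, probs_out = decode_prediction(probs, vocab)
print(f"Is this a cat?: {label}.")
print(f"Probability it's a cat: {probs_out[1]:.6f}")
```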

Where to go from here?

We’ve gone into quite some detail for a single example of a specific model trained on a specific task on a specific dataset using a specific architecture using a very high level Python API. How is this relevant for learning the vast field of deep learning? Well, as indicated above, the API is structured in a way that provides an insightful framework which aligns beautifully to the general endeavor of training a machine learning model. Accordingly, the same steps recur for a wide range of problems:

Want to train an image segmentation model?

path = untar_data(URLs.CAMVID_TINY)

dls = SegmentationDataLoaders.from_label_func(
    path, bs=8,
    fnames=get_image_files(path/'images'),
    label_func=lambda o: path/'labels'/f'{o.stem}_P{o.suffix}',
    codes=np.loadtxt(path/'codes.txt', dtype=str))

learn = unet_learner(dls, resnet34)
learn.fine_tune(8)

Want to build a classifier on tabular data without a pre-trained model?

from fastai.tabular.all import *

path = untar_data(URLs.ADULT_SAMPLE)

dls = TabularDataLoaders.from_csv(
    path/'adult.csv', path=path, y_names="salary",
    cat_names=['workclass', 'education', 'marital-status',
               'occupation', 'relationship', 'race'],
    cont_names=['age', 'fnlwgt', 'education-num'],
    procs=[Categorify, FillMissing, Normalize])

learn = tabular_learner(dls, metrics=accuracy)
learn.fit_one_cycle(3)

Want to build a collaborative filtering model?

from fastai.collab import *

path = untar_data(URLs.ML_SAMPLE)

dls = CollabDataLoaders.from_csv(path/'ratings.csv')

learn = collab_learner(dls, y_range=(0.5,5.5))

learn.fine_tune(10)

All in all, both the course and the library seem like a more than worthwhile pursuit and I am excited about the upcoming installments of this series of posts.

References:

- Walker, R. (2009). Education at Bat: Seven Principles for Educators. https://www.gse.harvard.edu/news/uk/09/01/education-bat-seven-principles-educators

- Adams, D. (1995). The Hitchhiker’s Guide to the Galaxy.

- Howard, J., & Gugger, S. (2020). fastai: A Layered API for Deep Learning. ArXiv, abs/2002.04688.

- Zeiler, M. D., & Fergus, R. (2014). Visualizing and Understanding Convolutional Networks. ECCV.

- Howard, J., & Gugger, S. (2020). Deep Learning for Coders with fastai and PyTorch. O’Reilly Media.